Several weeks ago, Vanity Fair published an article by NY Times columnist Maureen Dowd, Elon Musk’s Billion-Dollar Crusade to Stop the AI Apocalypse. “Elon Musk is famous for his futuristic gambles, but Silicon Valley’s latest rush to embrace artificial intelligence scares him,” noted Dowd. “And he thinks you should be frightened too.”

Entrepreneur and inventor Elon Musk is one of a number of world-renowned technologists and scientists who have expressed serious concerns that AI might be an existential threat to humanity, a group that includes Stephen Hawking, Ray Kurzweil and Bill Gates. But the vast majority of AI experts do not share their fears. A few months ago, Stanford University’s One Hundred Year Study of AI project published a report by a panel of experts assessing the current state of AI. Their overriding finding was that:

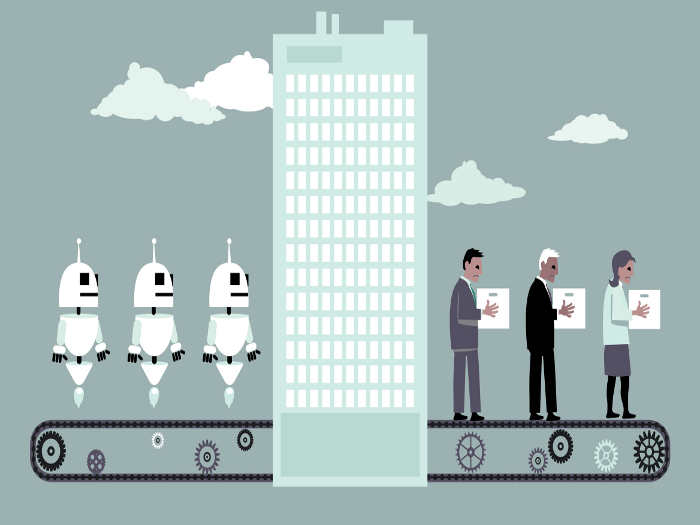

“Contrary to the more fantastic predictions for AI in the popular press, the Study Panel found no cause for concern that AI is an imminent threat to humankind. No machines with self-sustaining long-term goals and intent have been developed, nor are they likely to be developed in the near future. Instead, increasingly useful applications of AI, with potentially profound positive impacts on our society and economy are likely to emerge between now and 2030, the period this report considers. At the same time, many of these developments will spur disruptions in how human labor is augmented or replaced by AI, creating new challenges for the economy and society more broadly.”

That experts conclude AI poses no imminent threat to humanity, at least for the foreseeable future, doesn’t mean that such a powerful technology isn’t accompanied by serious challenges that require our attention.

Earlier this year, the World Economic Forum published Global Risks Report 2017, its 12th annual study of major global risks. As part of the study, the WEF conducted a survey in which it asked respondents to assess both the benefits and the risks of twelve emerging technologies. Artificial intelligence and robotics received the highest risk scores, as well as one of the highest benefits scores.

The WEF noted that unlike biotechnology, which also got high benefits and risks scores, AI is only lightly regulated, despite “the potential risks associated with letting greater decision-making powers move from humans to AI programmes, as well as the debate about whether and how to prepare for the possible development of machines with greater general intelligence than humans.”

A concrete case in point is predictive policing: the use of data and AI algorithms to automatically predict where crimes will take place and/or who will commit them. As explained in a recent article in Nature, “tight policing budgets are increasing demand for law-enforcement technologies. Police agencies hope to do more with less by outsourcing their evaluations of crime data to analytics and technology companies that produce predictive policing systems. These use algorithms to forecast where crimes are likely to occur and who might commit them, and to make recommendations for allocating police resources. Despite wide adoption, predictive policing is still in its infancy, open to bias and hard to evaluate…”
