Transparency: The First Step to Fixing Social Media

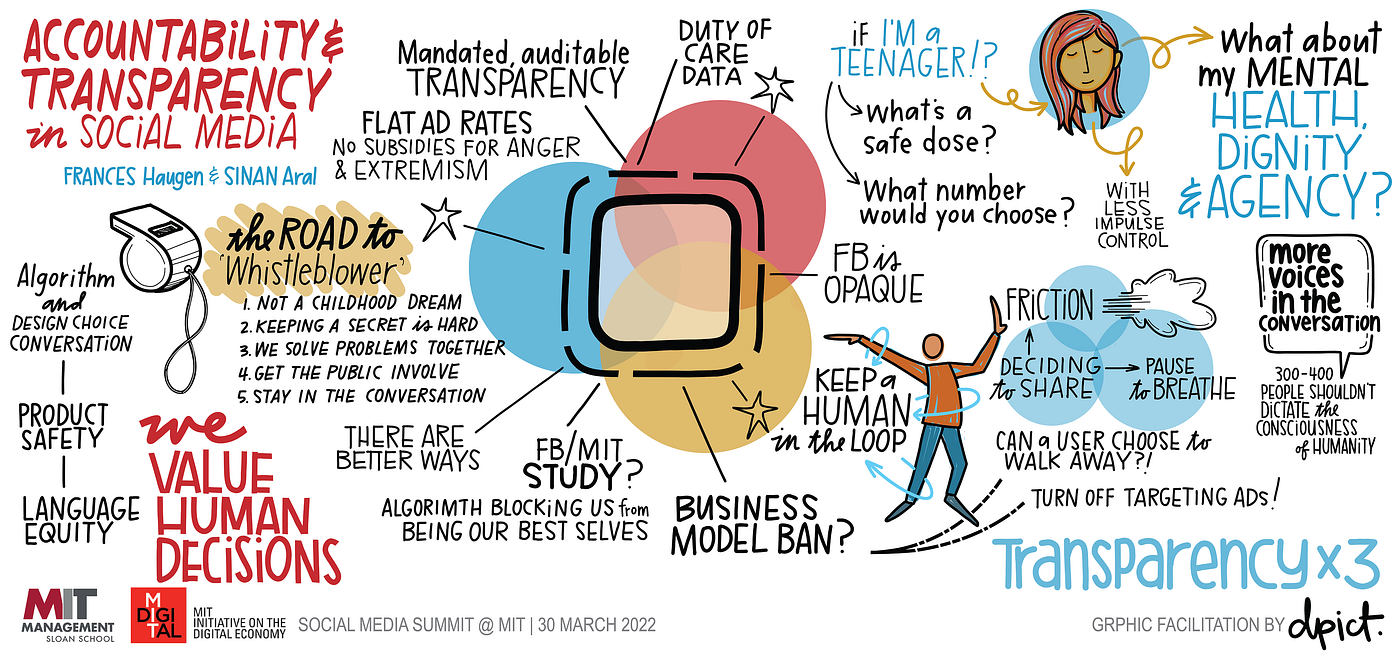

Experts, including Facebook whistleblower Frances Haugen, call for algorithmic transparency at MIT Social Media Summit

By Peter Krass

Social media companies are transforming society. Yet the inability to inspect their underlying assumptions, rules and methodologies is causing a backlash.

Greater transparency — that is, insight into the algorithms and assumptions that power social-media platforms — was a clarion call at this year’s MIT Social Media Summit (SMS) hosted by the Initiative on the Digital Economy (IDE). Greater transparency might mean Facebook revealing its source code to software engineers, Twitter sharing data with the public, or YouTube explaining how and why it recommends new videos to users. While that may sound straightforward, implementing better algorithmic accountability has many thorny nuances: Transparency for whom? And toward what end?

These were among the questions addressed at the second MIT SMS on March 31. The event attracted more than 12,000 virtual attendees and remote speakers from around the globe. Experts laid out the new reality: Technologies — including algorithms, artificial intelligence (AI) and machine learning — are exerting huge, far-reaching impacts across society. The reverberations of their influence affect such important issues as the mental health of teenagers, the public’s acceptance of COVID-19 vaccines, and the outcomes of national elections.

But the lack of public scrutiny of these technologies, such as how they work and how to correct their harmful effects, is frustrating users, academics and governments, according to several of the day’s panelists.

For instance, the dearth of programmers and other data professionals who develop social media algorithms is a big problem: “There are only 300 to 400 algorithm experts worldwide,” said Frances Haugen, a former Facebook product manager turned whistleblower who was also the day’s first featured speaker. These elites “shouldn’t be able to decide how social media works. There aren’t enough people at the table,” she said. Moreover, they need better direction about the implications of their designs on public safety.

Haugen, an electrical engineer who describes her current role as a defender of “civic integrity,” last year released some 10,000 documents from Facebook (renamed Meta in 2021) to the media and Congress. At the SMS she told IDE Director Sinan Aral why she had to call out her employer’s practices and what regulators and business leaders can do about the potentially harmful influence of algorithms that attract and keep people on their sites.

Ubiquitous Appeal

First, they need to recognize the problems their ubiquity causes. As the largest social media platform in the world, Meta now has 2.85 billion monthly active users.

Six of every 10 people who use the internet anywhere in the world check their Facebook feeds at least once a month, Haugen said.

That’s why the company’s secrecy is literally dangerous to people’s lives in countries where free speech is at risk, such as Myanmar and Ethiopia, she said. Top executives cannot ignore the perils of their products for the sake of profits.

In Myanmar, Haugen explained, there’s a precarious balance between online community and threats to democracy. While the company has been sued by Rohingya refugees for its failure to stop the spread of online hate speech that contributed to violence, Haugen said Meta had only one speaker of Burmese among its entire staff, currently estimated at nearly 72,000 employees. “It doesn’t matter what language you speak, you deserve to be safe” while using the platform, Haugen said. “Facebook invested so little in Myanmar, even though it knew this was an area that was fragile.”

Haugen describes Meta, where she worked as a product manager from 2019 to 2021, as a very “opaque system” where the algorithms can be “addictive” by design, attracting views for advertisers. “Algorithms don’t know if a topic is good for you or bad; all they know is some topics really draw people in,” she said. Anger, hate and extremism get views for advertisers; that’s the business model. The platform “has trouble seeing” that content amplification and constant feeds can be addictive, especially to children, teens and young people, she said.

Since leaving the company, Haugen has worked with others on ways to ensure safety on social media platforms — especially for children. Because Meta doesn’t make its algorithmic data available, “no one gets to see behind the curtain and they don’t know what questions to ask,” she said. “So what is an acceptable and reasonable level of rigor for keeping kids off these platforms, and what data would [the platforms] need to publish to understand whether they are meeting the duty of care?” More protective guardrails are needed, she argues.

Political Impact

While Meta may be one of the most prominent offenders, calls for greater transparency were made by other speakers throughout the day.

“What is the impact of social-media algorithms on politics?” asked Kartik Hosanagar, professor of operations, information and decisions at The Wharton School. “We can’t answer, because the data is mostly kept within the platforms.” For example, Hosanagar said, Twitter conducted a study on its news feeds and “while I don’t question the results of the study…all these studies go through internal approvals.” In other words, “we don’t know what we don’t know” because information is not released to the public.

Speaking on a panel about algorithmic transparency led by MIT Assistant Professor and IDE co-leader Dean Eckles, Hosanagar called for tech firms to open their source code to the public and academic researchers. “Platforms have too much control over the understanding of these phenomena. They should expose it to researchers.”

Eckles noted that new interfaces that give social-media users greater control over what they see may represent a new business opportunity. “That seems like a great area for a lot of potential innovation,” he said.

Daphne Keller, director of the Program on Platform Regulation at Stanford University’s Cyber Policy Center, said that some social-media algorithms display content that’s either against a person’s own interests or biased because of recommendation engines that amplify views: The more people view content, the more prevalent it becomes. “But you can’t really measure [the effect]. We don’t know what the baseline is,” she said.

While speakers offered a range of solutions, from greater openness on the part of social-media companies to new legislation, they all agreed on the need for greater visibility into the underlying assumptions behind social media.

Highlights from other panels during the Summit include:

- Dave Rand, an MIT professor and IDE research lead, discussed how much misinformation on social media is due to human nature versus how much is from algorithms delivering content people wouldn’t otherwise see.

- Renée Richardson Gosline, a Senior Lecturer at MIT Sloan and an IDE lead, noted that her MBA students, many of whom want to launch disruptive tech startups, “lag” when it comes to ethics.

- Natalia Levina, an NYU professor who grew up in Ukraine, observed that social media has surprisingly fostered a revival of personal networks.

Peter Krass is a contributing writer and editor.